Implementation details: From the original Transformer to GPT

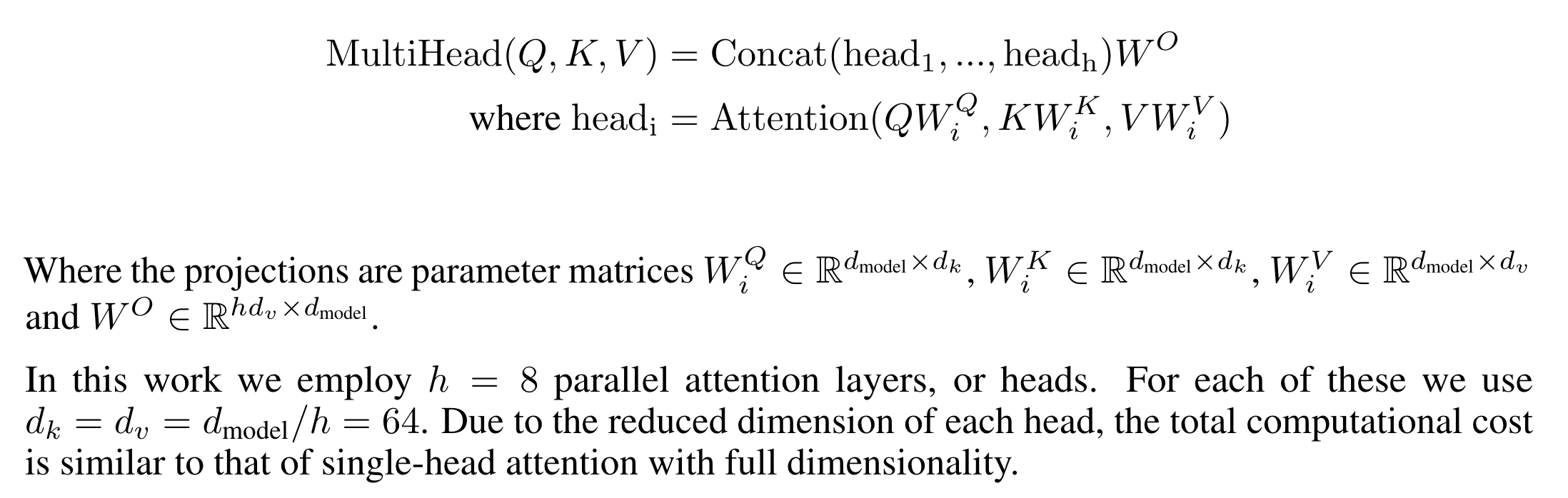

1 The original Transformer reviewed

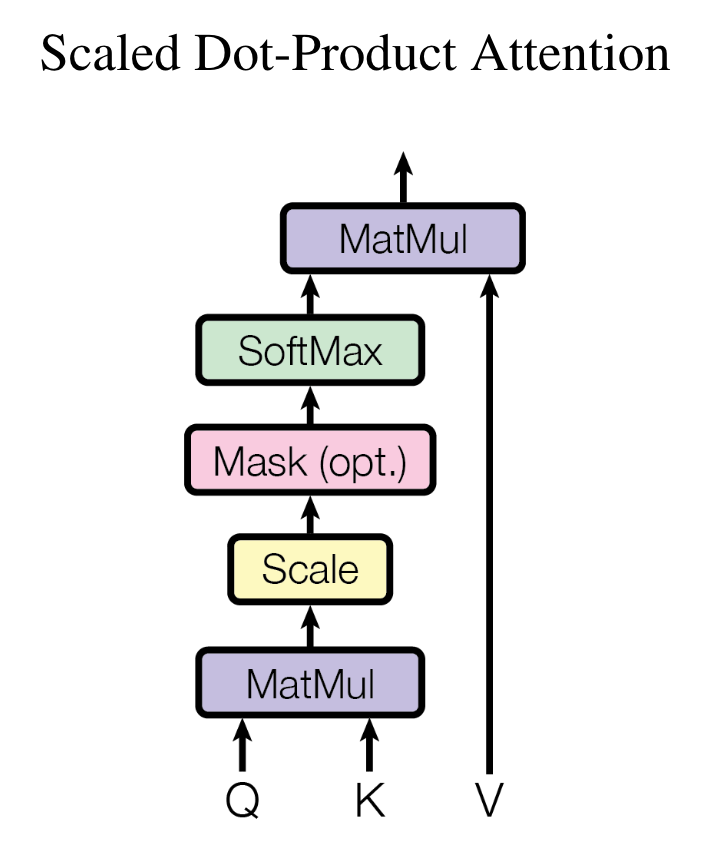

1.1 Attention block

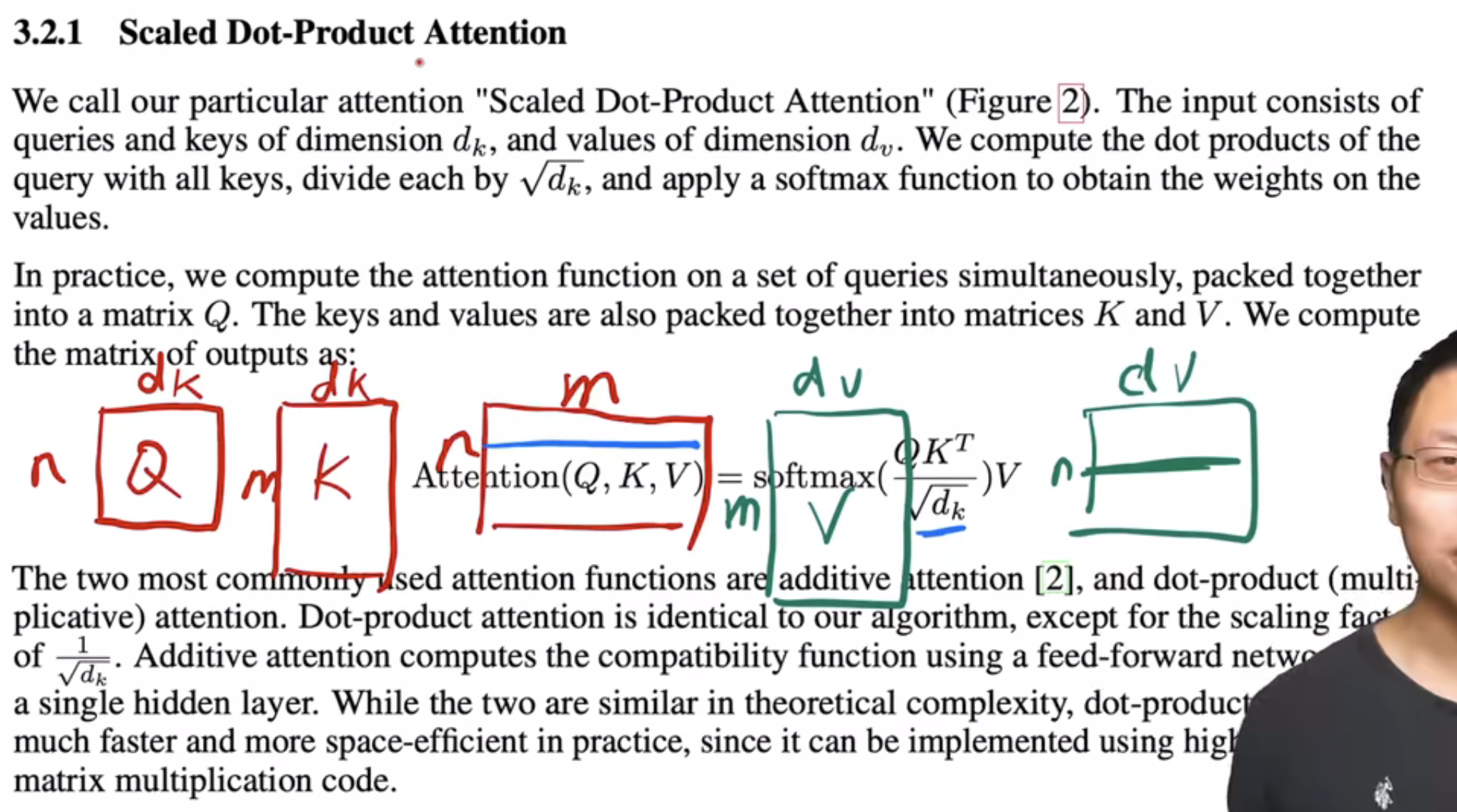

1.1.1 Scaled dot-product attention

- Queries and attention outputs are mapped one-to-one: for each query (a vector), we compute one output.

- So, if we have $n$ queries (i.e., $Q$ is $n \times d_k$), the output needs to be $n \times d_v$.

- The output for each query is a weighted sum of values.

- Softmax is applied to the rows of $QK^\top/\sqrt{d_k}$, i.e., each row $i$ of the resulting weight matrix $A$ sums to 1: $\sum_j A_{ij} = 1$.
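A minimal sketch of the bullets above, assuming single-head attention on unbatched 2-D tensors. The function name is our own choice for illustration; it is not PyTorch's F.scaled_dot_product_attention, although it computes the same formula.

```python
import math
import torch

def scaled_dot_product_attention(q: torch.Tensor, k: torch.Tensor, v: torch.Tensor):
    """q: (n, d_k), k: (m, d_k), v: (m, d_v) -> output (n, d_v), weights (n, m)."""
    d_k = q.size(-1)
    scores = q @ k.transpose(-2, -1) / math.sqrt(d_k)  # (n, m): one row per query
    weights = torch.softmax(scores, dim=-1)            # softmax over each row
    return weights @ v, weights                        # each output row = weighted sum of values

torch.manual_seed(0)
n, m, d_k, d_v = 3, 5, 8, 4
q, k, v = torch.randn(n, d_k), torch.randn(m, d_k), torch.randn(m, d_v)
out, w = scaled_dot_product_attention(q, k, v)
print(out.shape)       # torch.Size([3, 4]): n queries -> n outputs
print(w.sum(dim=-1))   # every row of the weights sums to 1 (up to float rounding)
```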

When we compute , we need to compute many vector doc product of size by . When is large (e.g., 512, 4096), the variance of result become large. That is, if we select out one row (recall softmax is applied to rows) of , we will observe some elements are very small while some others are very large. If we apply softmax on this row, the result will be skewed towards either 0 or 1.

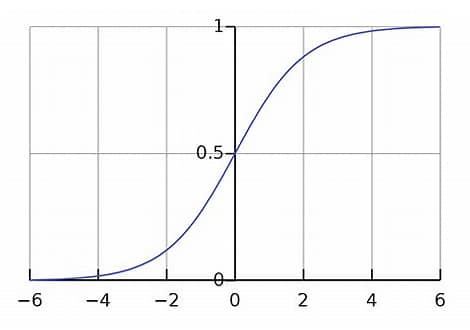

This leads to small gradient update. You can see the gradient at the two sizes of a sigmoid function is close to zero:

If we divide by , the result of softmax is closer to the center of the distribution, and the gradient become larger.
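The variance argument can be checked numerically. This sketch assumes, as above, that the components of $q$ and $k$ are independent with mean 0 and variance 1; real queries and keys are learned, so this only illustrates the scaling argument.

```python
import math
import torch

torch.manual_seed(0)
n_samples = 2_000
for d_k in (64, 512, 4096):
    # q and k components: independent, mean 0, variance 1 (the assumption stated above)
    q = torch.randn(n_samples, d_k)
    k = torch.randn(n_samples, d_k)
    dots = (q * k).sum(dim=-1)      # n_samples independent dot products
    scaled = dots / math.sqrt(d_k)  # divide by sqrt(d_k)
    print(f"d_k={d_k}: var(q.k)={dots.var().item():.0f}, "
          f"var(q.k/sqrt(d_k))={scaled.var().item():.2f}")

# Treat 10 scores (from the last d_k) as one row of QK^T and compare softmax outputs.
p_unscaled = torch.softmax(dots[:10], dim=0).max().item()
p_scaled = torch.softmax(scaled[:10], dim=0).max().item()
print(f"largest weight: unscaled={p_unscaled:.3f}, scaled={p_scaled:.3f}")
```

The unscaled variance should track $d_k$ while the scaled one stays near 1, and the unscaled row is much closer to one-hot, which is exactly the saturated regime with small gradients.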

The brief explanation from the authors is:

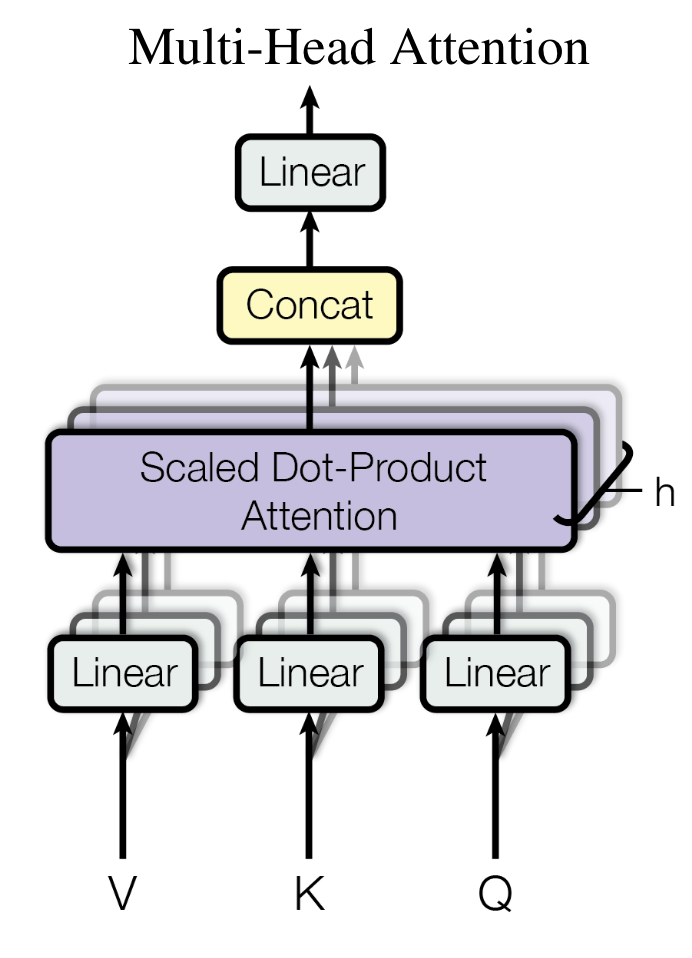

1.1.2 Multi-head attention (MHA)

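If we only use dot-product attention, there are no learnable parameters! MHA adds learnable projections $W_i^Q, W_i^K, W_i^V$ for each head $i$, plus an output projection $W^O$ applied to the concatenated heads.

- Each head can learn a different attention pattern.

- Each head is like a “filter” in a CNN.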
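To make the point about learnable parameters concrete, here is a minimal MHA sketch. The class and attribute names (MultiHeadAttention, w_q, w_k, w_v, w_o) are hypothetical, the projections keep nn.Linear's default bias, and masking and dropout are omitted. PyTorch's nn.MultiheadAttention packages the same idea.

```python
import math
import torch
from torch import nn

class MultiHeadAttention(nn.Module):
    """Minimal MHA sketch: all learnable parameters live in the four projections."""
    def __init__(self, d_model: int, n_heads: int):
        super().__init__()
        assert d_model % n_heads == 0
        self.h, self.d_head = n_heads, d_model // n_heads
        self.w_q = nn.Linear(d_model, d_model)  # W^Q of all heads, stacked
        self.w_k = nn.Linear(d_model, d_model)  # W^K of all heads
        self.w_v = nn.Linear(d_model, d_model)  # W^V of all heads
        self.w_o = nn.Linear(d_model, d_model)  # W^O mixes the concatenated heads

    def _split(self, x: torch.Tensor) -> torch.Tensor:  # (B, T, d_model) -> (B, h, T, d_head)
        B, T, _ = x.shape
        return x.view(B, T, self.h, self.d_head).transpose(1, 2)

    def forward(self, x_q: torch.Tensor, x_kv: torch.Tensor):
        q, k, v = self._split(self.w_q(x_q)), self._split(self.w_k(x_kv)), self._split(self.w_v(x_kv))
        scores = q @ k.transpose(-2, -1) / math.sqrt(self.d_head)  # (B, h, T_q, T_kv)
        weights = scores.softmax(dim=-1)   # one attention pattern per head
        heads = weights @ v                # (B, h, T_q, d_head)
        B, _, T_q, _ = heads.shape
        concat = heads.transpose(1, 2).reshape(B, T_q, self.h * self.d_head)
        return self.w_o(concat), weights

torch.manual_seed(0)
mha = MultiHeadAttention(d_model=16, n_heads=4)
x = torch.randn(2, 5, 16)
out, weights = mha(x, x)          # self-attention: Q, K, V all come from x
print(out.shape, weights.shape)   # torch.Size([2, 5, 16]) torch.Size([2, 4, 5, 5])
```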

1.1.3 Cross attention (Encoder & decoder)

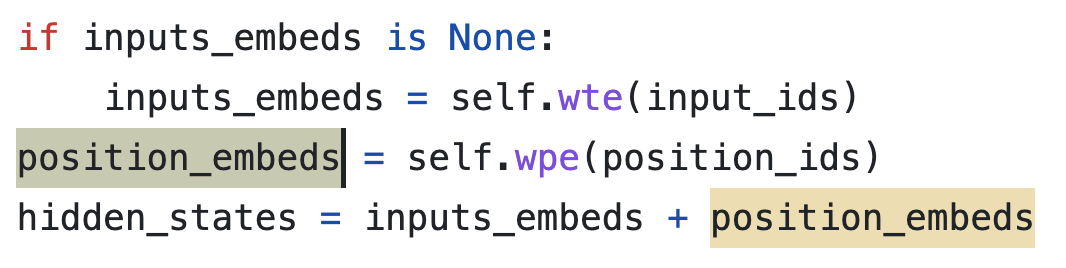
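A short sketch of cross attention using PyTorch's nn.MultiheadAttention: the queries come from the decoder, while keys and values come from the encoder output ("memory"). The sizes are arbitrary example values.

```python
import torch
from torch import nn

torch.manual_seed(0)
d_model, n_heads = 16, 4
cross_attn = nn.MultiheadAttention(d_model, n_heads, batch_first=True)

memory = torch.randn(2, 7, d_model)  # encoder output: source length 7
x = torch.randn(2, 3, d_model)       # decoder states: target length 3

# Queries from the decoder; keys and values from the encoder memory.
out, attn_weights = cross_attn(query=x, key=memory, value=memory)
print(out.shape)           # torch.Size([2, 3, 16]): one output per decoder position
print(attn_weights.shape)  # torch.Size([2, 3, 7]): averaged over heads by default
```

The output length follows the queries (the target side), while each weight row spans the source positions.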

1.2 FFN block

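FFN is simply an MLP applied to the last dimension. The output of the attention block is $n \times d_{model}$, where $n$ is the sequence length (num tokens). Each row (a vector of size $d_{model}$) is the embedding of one token. What FFN does is: project each row from $d_{model}$ to $d_{ff}$, then project it back to $d_{model}$ ($d_{model} = 512$ and $d_{ff} = 2048$ in the paper).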
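A minimal position-wise FFN sketch with the paper's base sizes and ReLU activation; the token count is an arbitrary example.

```python
import torch
from torch import nn

d_model, d_ff = 512, 2048   # base-model sizes from the original paper
ffn = nn.Sequential(
    nn.Linear(d_model, d_ff),  # project d_model -> d_ff
    nn.ReLU(),                 # ReLU, as in the original paper
    nn.Linear(d_ff, d_model),  # project back d_ff -> d_model
)

torch.manual_seed(0)
x = torch.randn(10, d_model)   # attention-block output for 10 tokens
y = ffn(x)
# Position-wise: applying the FFN to a single token gives the same row.
print(y.shape, torch.allclose(y[3], ffn(x[3]), atol=1e-5))
```

Because nn.Linear acts on the last dimension, the same weights are applied to every token independently.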

1.3 Embedding and positional encoding

- In the original paper, the authors use the same embedding matrix in three places: 1) the encoder, 2) the decoder, and 3) the final linear layer before softmax, which produces $V$ logits for each token ($V$ is the vocabulary size).

- Embedding matrix: $V \times d_{model}$

- The authors also multiply the embedding weights by $\sqrt{d_{model}}$.

- An observation is that after training, the l2 norm of an embedding vector is usually very small and doesn't increase with $d_{model}$. For example, when $d_{model}$ is increased from 512 to 4096, the l2 norm of the embedding may still be 1.

- However, the l2 norm of the sinusoidal positional encoding DOES increase with $d_{model}$: each (sin, cos) pair contributes 1 to the squared norm, so the norm equals $\sqrt{d_{model}/2}$.

- So, if we don't scale the embedding, it will be dominated by the positional encoding when they're added up.
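The sketch below checks the positional-encoding norm and shows the shared, scaled embedding. The vocabulary size and token ids are hypothetical, and initializing the embedding with std $d_{model}^{-0.5}$ is an assumption chosen so rows start with l2 norm near 1, matching the observation above.

```python
import math
import torch
from torch import nn

def sinusoidal_pe(max_len: int, d_model: int) -> torch.Tensor:
    """PE[pos, 2i] = sin(pos / 10000^(2i/d_model)), PE[pos, 2i+1] = cos(same angle)."""
    pos = torch.arange(max_len, dtype=torch.float32).unsqueeze(1)  # (max_len, 1)
    inv_freq = torch.exp(torch.arange(0, d_model, 2, dtype=torch.float32)
                         * (-math.log(10000.0) / d_model))         # (d_model / 2,)
    pe = torch.zeros(max_len, d_model)
    pe[:, 0::2] = torch.sin(pos * inv_freq)
    pe[:, 1::2] = torch.cos(pos * inv_freq)
    return pe

# Each (sin, cos) pair has unit norm, so every PE row has l2 norm sqrt(d_model / 2).
for d_model in (512, 4096):
    print(d_model, sinusoidal_pe(100, d_model).norm(dim=-1)[:3], math.sqrt(d_model / 2))

# Shared embedding: one V x d_model matrix for the input embedding and the pre-softmax layer.
V, d_model = 1000, 512                               # hypothetical vocabulary size
embed = nn.Embedding(V, d_model)
nn.init.normal_(embed.weight, std=d_model ** -0.5)   # assumption: rows start with norm ~1
lm_head = nn.Linear(d_model, V, bias=False)
lm_head.weight = embed.weight                        # weight tying

tokens = torch.tensor([[5, 42, 7]])                  # hypothetical token ids
x = embed(tokens) * math.sqrt(d_model) + sinusoidal_pe(tokens.size(1), d_model)
logits = lm_head(x)  # (1, 3, V); in a real model the decoder output would go here
print(embed(tokens).norm(dim=-1), (embed(tokens) * math.sqrt(d_model)).norm(dim=-1))
```

After scaling, the embedding norm is about $\sqrt{d_{model}}$, the same order as the PE norm $\sqrt{d_{model}/2}$.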

1.4 Dropout

- Residual dropout: applied to each sublayer's output before it is added to the sublayer input (see “Drop1” and “Drop2” in the following pre-LN example; the plain “Drop” is FFN dropout)

x = x + Drop1(MHA(LN(x)))  # Attention sublayer

x = x + Drop2(Linear2(Drop(activation(Linear1(LN(x))))))  # FFN sublayer

- Attention dropout: applied after softmax, before multiplying by V (i.e., on the attention weights).

- Embedding dropout: after the sum of embedding and positional encoding

Drop(input_embed + pos_enc)

- FFN dropout: only on the hidden layer of the FFN (the “Drop” in the example above).
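Where attention dropout sits can be sketched in a few lines; the helper name attention_with_dropout is hypothetical. Dropout zeroes some attention weights and rescales the survivors by $1/(1-p)$ during training, and is a no-op at evaluation time.

```python
import math
import torch
import torch.nn.functional as F

def attention_with_dropout(q, k, v, p: float, training: bool):
    scores = q @ k.transpose(-2, -1) / math.sqrt(q.size(-1))
    weights = torch.softmax(scores, dim=-1)
    weights = F.dropout(weights, p=p, training=training)  # attention dropout: after softmax, before @ V
    return weights @ v, weights

torch.manual_seed(0)
q = k = v = torch.randn(4, 8)
_, w_train = attention_with_dropout(q, k, v, p=0.1, training=True)
_, w_eval = attention_with_dropout(q, k, v, p=0.1, training=False)
print(w_train.sum(dim=-1))  # rows generally no longer sum to 1
print(w_eval.sum(dim=-1))   # no dropout at eval time, so every row sums to 1
```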

1.5 LayerNorm

- There are two LayerNorm placements: pre-LN and post-LN. The original Transformer paper uses post-LN, but GPT-2 and later models prefer pre-LN.

- Pre-LN keeps the gradients well-behaved at initialization, which makes training more stable and less dependent on learning-rate warm-up (Xiong et al., 2020).
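The two placements can be sketched as small PyTorch wrappers around any sublayer; the class names PreLN and PostLN are hypothetical. One visible difference: post-LN re-normalizes the residual stream after every addition, while pre-LN leaves the residual stream itself un-normalized.

```python
import torch
from torch import nn

class PreLN(nn.Module):
    """x + sublayer(LN(x)): only the sublayer input is normalized."""
    def __init__(self, d_model: int, sublayer: nn.Module):
        super().__init__()
        self.ln, self.sublayer = nn.LayerNorm(d_model), sublayer
    def forward(self, x):
        return x + self.sublayer(self.ln(x))

class PostLN(nn.Module):
    """LN(x + sublayer(x)): the output is normalized after the residual add."""
    def __init__(self, d_model: int, sublayer: nn.Module):
        super().__init__()
        self.ln, self.sublayer = nn.LayerNorm(d_model), sublayer
    def forward(self, x):
        return self.ln(x + self.sublayer(x))

torch.manual_seed(0)
d_model = 32
ffn = nn.Sequential(nn.Linear(d_model, 4 * d_model), nn.ReLU(), nn.Linear(4 * d_model, d_model))
x = 3.0 * torch.randn(2, 5, d_model)   # a residual stream with a large scale
pre, post = PreLN(d_model, ffn)(x), PostLN(d_model, ffn)(x)
print(pre.std(dim=-1).mean(), post.std(dim=-1).mean())  # pre keeps the scale; post is ~1
```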

# pre-norm (preferred)

x = x + MHA(LN(x))

x = x + FFN(LN(x))

# post-norm

x = LN(x + MHA(x))

x = LN(x + FFN(x))

2 PyTorch implementation of Transformer

last update: pytorch-2.0.1

2.1 Config

- norm_first: If True, use pre-norm. Default: False (post-norm).

- dim_feedforward: Default 2048.

- activation: Default “relu”.

- dropout: Default 0.1.
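A quick check of these defaults and of what norm_first=True computes, assuming PyTorch 2.0. The layer is put in eval mode so that every dropout is a no-op and a hand-written pre-LN forward pass can be compared with the module's output; d_model=16 and nhead=4 are arbitrary.

```python
import torch
from torch import nn

torch.manual_seed(0)
layer = nn.TransformerEncoderLayer(d_model=16, nhead=4, batch_first=True, norm_first=True)
print(layer.linear1.out_features, layer.dropout.p, layer.activation)  # 2048, 0.1, relu
layer.eval()  # disables all dropout so the comparison is deterministic

x = torch.randn(2, 5, 16)
# Pre-LN written out by hand (dropout is a no-op in eval mode).
n1 = layer.norm1(x)
h = x + layer.self_attn(n1, n1, n1, need_weights=False)[0]                # Self-Attn sublayer
ref = h + layer.linear2(layer.activation(layer.linear1(layer.norm2(h))))  # FFN sublayer
out = layer(x)
print(torch.allclose(out, ref, atol=1e-5))
```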

2.2 Encoder

# How TransformerEncoderLayer.forward works

# x: input source

# Drop1, Drop2: residual dropout

# Drop: FFN dropout

# note: SA() applies only attention dropout (on the attention weights); the residual dropouts are outside it

# Pre-LN (preferred)

x = x + Drop1(SA(LN(x))) # Self-Attn sublayer

x = x + Drop2(Linear2(Drop(Activation(Linear1(LN(x)))))) # FFN sublayer

# Post-LN

x = LN(x + Drop1(SA(x))) # Self-Attn sublayer

x = LN(x + Drop2(Linear2(Drop(Activation(Linear1(x)))))) # FFN sublayer

2.3 Decoder

# How TransformerDecoderLayer.forward works

# x: the "Q"

# memory: the "K" and "V", from encoder

# Drop1, Drop2, Drop3: residual dropout

# Drop: FFN dropout

# note: SA() and MHA() apply only attention dropout (on the attention weights); the residual dropouts are outside them

# Pre-LN (preferred)

x = x + Drop1(SA(LN1(x))) # SA sublayer

x = x + Drop2(MHA(LN2(x), memory)) # Multi-Head Attn

x = x + Drop3(Linear2(Drop(Activation(Linear1(LN3(x)))))) # FFN sublayer

# Post-LN

x = LN1(x + Drop1(SA(x))) # SA

x = LN2(x + Drop2(MHA(x, memory))) # MHA

x = LN3(x + Drop3(Linear2(Drop(Activation(Linear1(x)))))) # FFN sublayer

3 Compare with GPT

Below, I show how GPTs differ from the original Transformer. Since GPT-3 and later models are not open-source, the GPT code is from Huggingface’s implementation of GPT-2. OpenAI states that GPT-3 uses the same architecture as GPT-2, except that it alternates dense and locally banded sparse attention patterns, similar to the Sparse Transformer.

- In the original paper, the decoder has three sublayers (SelfAttn, CrossAttn, and FFN) because it needs input from the encoder.

- GPT-2 has no encoder, so it has no “CrossAttn”.

- So, GPT’s decoder is equivalent to an encoder, except for the causal mask in the SelfAttn (see this SO post).
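The "encoder plus causal mask" view can be checked with PyTorch's own encoder layer used as a stand-in for a GPT block. This is not GPT-2's code (PyTorch defaults to ReLU rather than GELU, for example); it only shows that with a causal mask, earlier positions are unaffected by later tokens. Dropout is set to 0 to keep the comparison deterministic.

```python
import torch
from torch import nn

torch.manual_seed(0)
T, d_model = 6, 16
block = nn.TransformerEncoderLayer(d_model, nhead=4, batch_first=True, norm_first=True, dropout=0.0)
causal_mask = nn.Transformer.generate_square_subsequent_mask(T)  # -inf above the diagonal

x = torch.randn(1, T, d_model)
x_changed = x.clone()
x_changed[:, -1] += 1.0  # perturb only the last token

y = block(x, src_mask=causal_mask)
y_changed = block(x_changed, src_mask=causal_mask)
# Earlier positions cannot attend to the last token, so their outputs do not change.
print(torch.allclose(y[:, :-1], y_changed[:, :-1], atol=1e-6))
```

Without the mask, every position would attend to the last token and all outputs would change.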

3.1 Tokenizer: A variant of BPE

- Works on bytes, but avoids merges across character categories (e.g., punctuation and letters are not allowed to merge), except for spaces.

- e.g.,

"Hello world" => ["Hello", " world"]. Notice there’s a space in front of “world.”
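The "no merges across categories" rule is enforced by splitting the text into chunks with a regex before BPE runs. As far as I know, GPT-2's pattern uses Unicode classes (\p{L}, \p{N}) from the third-party regex module; this sketch swaps in ASCII-only classes so it runs with the standard re module, and it shows only the pre-tokenization step, not the byte-level BPE merges applied inside each chunk.

```python
import re

# ASCII-only approximation of GPT-2's pre-tokenization pattern.
PAT = re.compile(r"""'s|'t|'re|'ve|'m|'ll|'d| ?[A-Za-z]+| ?[0-9]+| ?[^\sA-Za-z0-9]+|\s+(?!\S)|\s+""")

def pre_tokenize(text: str) -> list[str]:
    # BPE merges happen only inside each chunk, never across chunk boundaries.
    return PAT.findall(text)

print(pre_tokenize("Hello world"))    # ['Hello', ' world']
print(pre_tokenize("Hello, world!"))  # ['Hello', ',', ' world', '!']
print(pre_tokenize("it's 2023"))      # ['it', "'s", ' 2023']
```

The leading space stays attached to the following word, and punctuation always ends up in its own chunk.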

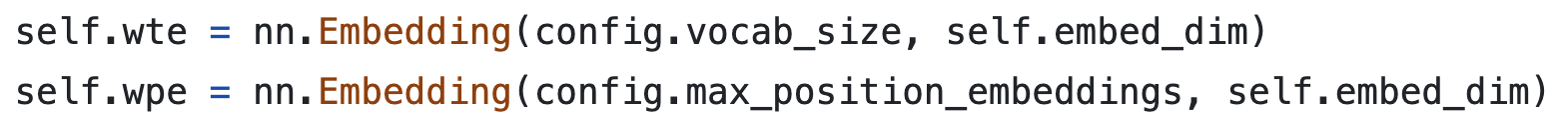

3.2 Embedding & positional encoding

- Both are learned

Source: Huggingface

- Embedding is not scaled before being added to the positional encoding (PE).

- In the original Transformer, input_embeds is multiplied by $\sqrt{d_{model}}$. That’s because the PE is determined by sin/cos (not learned!) and its l2 norm increases with $d_{model}$.

- But in GPT, the PE is learned. Therefore, the PE and input_embeds can be of similar scale.

Source: Huggingface
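A sketch of GPT-2-style learned embeddings using GPT-2 small sizes (vocabulary 50257, 1024 positions, 768 dims). The names wte and wpe follow Huggingface's GPT2Model attributes; the token ids are arbitrary example values.

```python
import torch
from torch import nn

torch.manual_seed(0)
vocab_size, n_positions, d_model = 50257, 1024, 768   # GPT-2 small sizes
wte = nn.Embedding(vocab_size, d_model)   # token embedding (learned)
wpe = nn.Embedding(n_positions, d_model)  # position embedding (learned, not sin/cos)
for emb in (wte, wpe):
    nn.init.normal_(emb.weight, mean=0.0, std=0.02)  # GPT-2 init, see 3.6
drop = nn.Dropout(0.1)                    # embedding dropout

input_ids = torch.tensor([[15496, 995]])  # arbitrary example token ids
position_ids = torch.arange(input_ids.size(1)).unsqueeze(0)
hidden = drop(wte(input_ids) + wpe(position_ids))  # no sqrt(d_model) scaling
print(hidden.shape)  # torch.Size([1, 2, 768])
print(wte.weight.norm(dim=-1).mean(), wpe.weight.norm(dim=-1).mean())  # similar scale at init
```

Both tables start from the same distribution, so neither term dominates the sum.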

3.3 LayerNorm

- It uses “pre-LN”

- Another LN (ln_f in Huggingface’s code) is added after the final Transformer block, before the output layer

# first go through the blocks

for block in DecoderList:

    x = block(x)

# the final LN before output!

output = LN(x)

3.4 Dropout

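- GPT-2 has dropout in the residual, embedding, and attention positions, same as the original Transformer (p=0.1; resid_pdrop, embd_pdrop, and attn_pdrop in Huggingface’s GPT2Config).

- GPT-2 has no dropout on the FFN hidden layer!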
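A hypothetical minimal pre-LN block that follows the dropout placement above (attention dropout, residual dropout after each sublayer, nothing on the FFN hidden layer), stacked and followed by the extra final LN from 3.3. The class and attribute names are our own; this is a sketch, not Huggingface's code.

```python
import math
import torch
import torch.nn.functional as F
from torch import nn

class GPT2StyleBlock(nn.Module):
    """Hypothetical pre-LN causal block mirroring GPT-2's dropout placement."""
    def __init__(self, d_model: int, n_heads: int, p: float = 0.1):
        super().__init__()
        self.n_heads = n_heads
        self.ln_1, self.ln_2 = nn.LayerNorm(d_model), nn.LayerNorm(d_model)
        self.qkv = nn.Linear(d_model, 3 * d_model)    # fused Q, K, V projections
        self.attn_out = nn.Linear(d_model, d_model)   # W^O
        self.fc_in = nn.Linear(d_model, 4 * d_model)
        self.fc_out = nn.Linear(4 * d_model, d_model)
        self.attn_drop = nn.Dropout(p)   # attention dropout
        self.resid_drop = nn.Dropout(p)  # residual dropout (stateless, reused by both sublayers)

    def attn(self, x: torch.Tensor) -> torch.Tensor:
        B, T, C = x.shape
        q, k, v = self.qkv(x).split(C, dim=-1)
        q, k, v = (t.view(B, T, self.n_heads, C // self.n_heads).transpose(1, 2) for t in (q, k, v))
        scores = q @ k.transpose(-2, -1) / math.sqrt(C // self.n_heads)
        causal = torch.ones(T, T, dtype=torch.bool, device=x.device).tril()
        scores = scores.masked_fill(~causal, float("-inf"))  # no attending to future tokens
        weights = self.attn_drop(scores.softmax(dim=-1))
        y = (weights @ v).transpose(1, 2).reshape(B, T, C)
        return self.resid_drop(self.attn_out(y))

    def mlp(self, x: torch.Tensor) -> torch.Tensor:
        h = F.gelu(self.fc_in(x), approximate="tanh")  # no dropout on this hidden layer
        return self.resid_drop(self.fc_out(h))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = x + self.attn(self.ln_1(x))
        return x + self.mlp(self.ln_2(x))

torch.manual_seed(0)
d_model = 32
blocks = nn.ModuleList([GPT2StyleBlock(d_model, n_heads=4) for _ in range(2)])
ln_f = nn.LayerNorm(d_model)   # the extra LN after the last block
emb_drop = nn.Dropout(0.1)     # embedding dropout

x = emb_drop(torch.randn(1, 6, d_model))  # stand-in for token + position embeddings
for block in blocks:
    x = block(x)
out = ln_f(x)
print(out.shape)  # torch.Size([1, 6, 32])
```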

3.5 Activation: GELU

(Hendrycks & Gimpel, 2016) where is CDF of normal. Its expectation can be approximated with:

def glue(self, input: Tensor) -> Tensor:

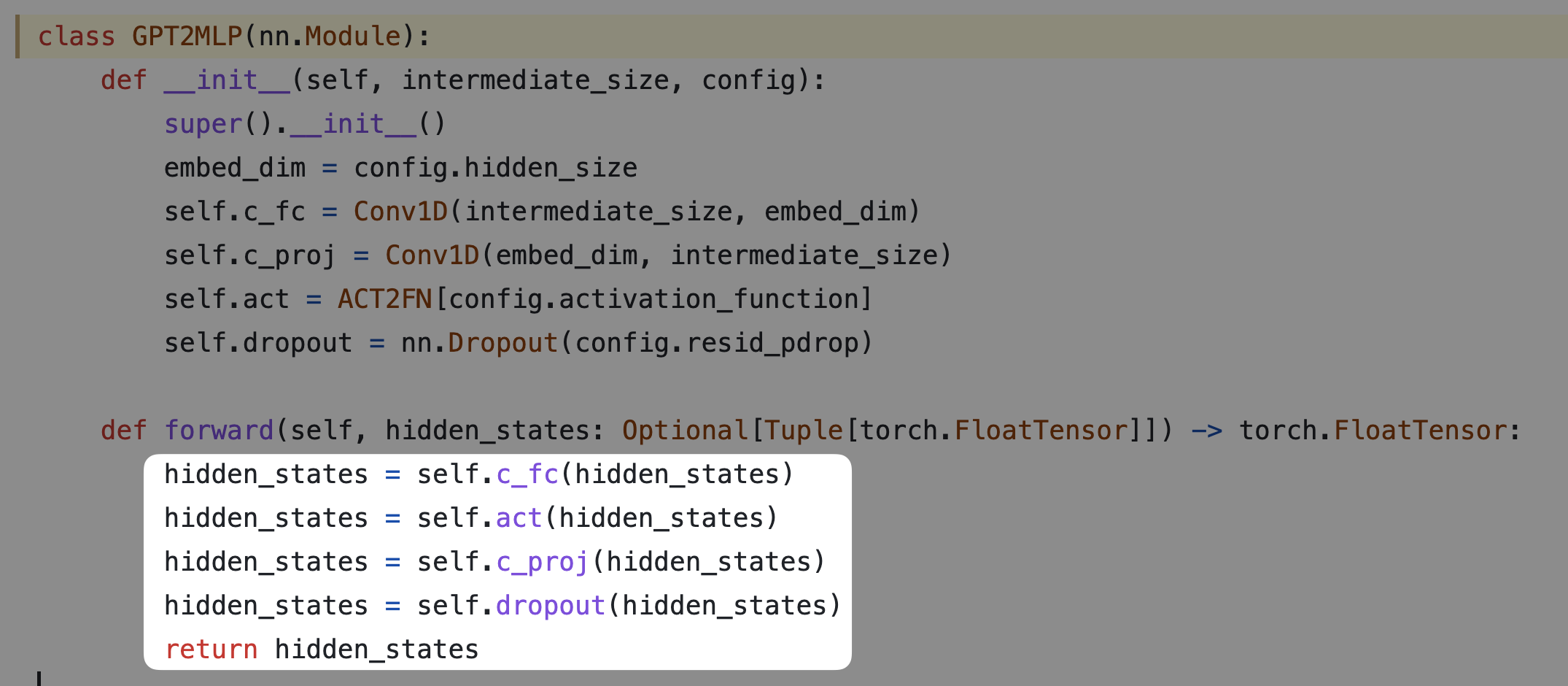

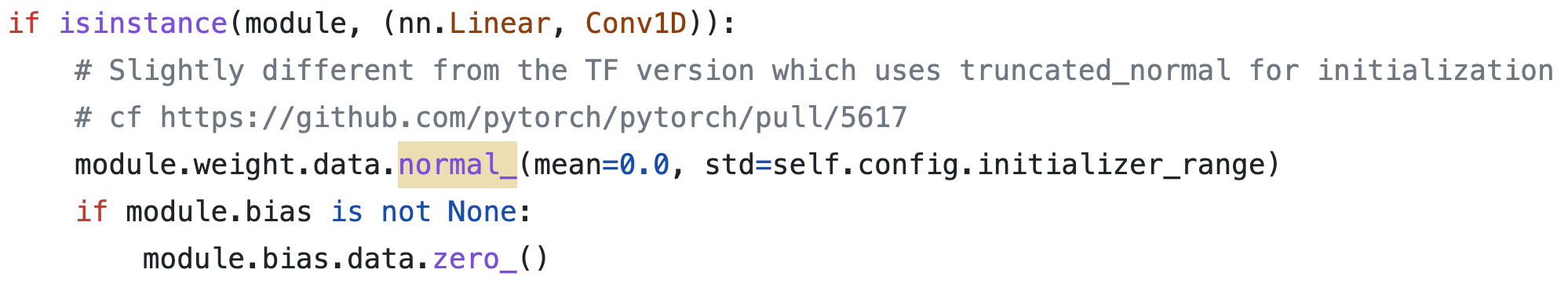

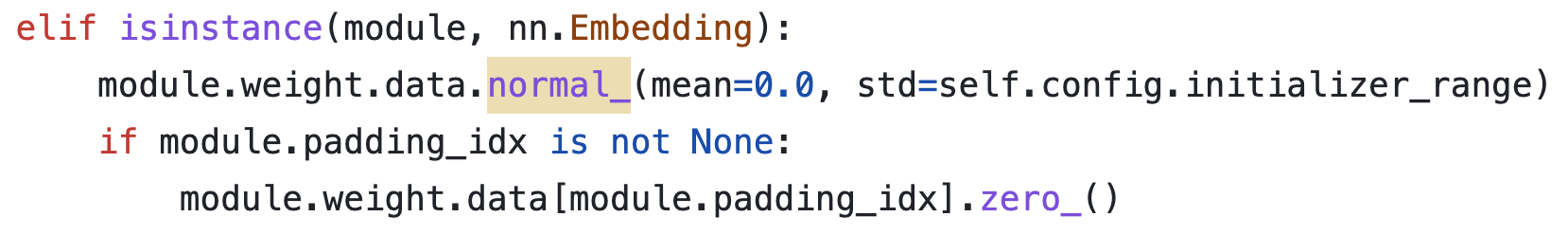

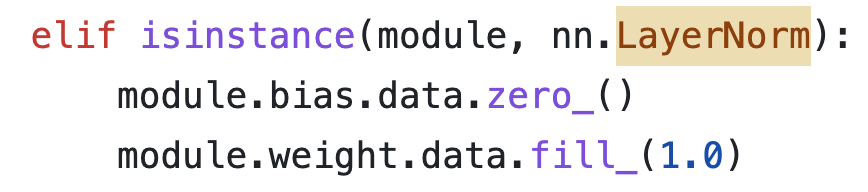

return 0.5 * input * (1.0 + torch.tanh(math.sqrt(2.0 / math.pi) * (input + 0.044715 * torch.pow(input, 3.0))))3.6 Initialization

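- Linear and Conv1D weights are drawn from a normal distribution with mean=0, std=0.02 (biases are set to 0).

- Embedding & PE are drawn from a normal distribution with mean=0, std=0.02; the row at padding_idx (if any) is set to 0.

- LayerNorm weight is initialized to 1 and bias to 0, so its affine transformation starts as the identity.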

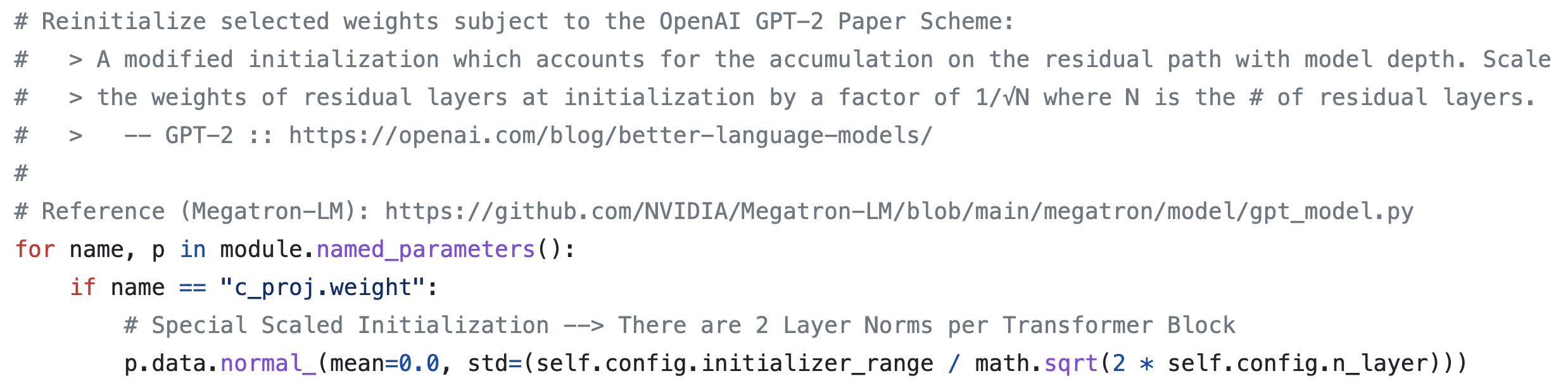

- (important!) Reinit selected weights

- c_proj is the $W^O$ matrix in the original paper; it's $d_{model} \times d_{model}$. The concatenated attention outputs are multiplied by $W^O$ before being sent to the residual path.

- I still don't fully understand why “training signals will accumulate through the residual path.”
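To my understanding, Huggingface's GPT-2 (GPT2PreTrainedModel._init_weights) re-initializes every parameter named c_proj.weight (the attention $W^O$ and the second MLP projection, both of which write into the residual stream) with std = 0.02/√(2·n_layer). The sketch below reproduces that rule on a hypothetical toy model (ToyBlock and reinit_c_proj are our own names), then gives a toy intuition for the "accumulate" phrase: each of the 2·n_layer sublayers adds a term to the residual stream, and if those terms were independent with equal variance (a simplifying assumption, not true of real sublayers), the variance of their sum would grow linearly with 2·n_layer. Scaling each term's std by 1/√(2·n_layer) keeps the total roughly constant.

```python
import math
import torch
from torch import nn

torch.manual_seed(0)

def reinit_c_proj(model: nn.Module, n_layer: int, initializer_range: float = 0.02) -> None:
    """Re-draw every '...c_proj.weight' with std = initializer_range / sqrt(2 * n_layer)."""
    for name, p in model.named_parameters():
        if name.endswith("c_proj.weight"):
            nn.init.normal_(p, mean=0.0, std=initializer_range / math.sqrt(2 * n_layer))

class ToyBlock(nn.Module):  # hypothetical: only the parameter names matter here
    def __init__(self, d: int):
        super().__init__()
        self.c_attn = nn.Linear(d, 3 * d)
        self.c_proj = nn.Linear(d, d)

n_layer = 12
model = nn.Sequential(*[ToyBlock(64) for _ in range(n_layer)])
reinit_c_proj(model, n_layer)
print(model[0].c_proj.weight.std().item(), 0.02 / math.sqrt(2 * n_layer))

# Toy residual stream: each sublayer (attention + MLP per block) adds an independent random term.
def residual_std(scale: float) -> float:
    x = torch.zeros(1000, 256)
    for _ in range(2 * n_layer):
        x = x + scale * torch.randn(1000, 256)
    return x.std().item()

print(residual_std(1.0))                           # grows like sqrt(2 * n_layer)
print(residual_std(1.0 / math.sqrt(2 * n_layer)))  # stays around 1
```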