How to Parse 10-K and 10-Q

1 The problem of extracting “items”

One common task when parsing 10-K/Q files is to extract “items” (sections) from the filing. A 10-K filing typically includes the following items, among others:

- Business

- Risk factors

- Selected financial data

- Management’s discussion and analysis

- Financial statements and supplementary data
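For reference, each of these items carries a standard number in the 10-K, and the extraction rules in Section 3 key on that number plus a word from the title (for example, "item\s*1a ... Risk"). The sketch below is illustrative only: `TEN_K_ITEMS` and `heading_pattern` are hypothetical names, the table adds Item 7A because the code in Section 3 also extracts it, and the pattern is far simpler than what real filings require.

```python
import re

# Item numbers and titles of the 10-K items listed above, plus Item 7A
TEN_K_ITEMS = {
    "1": "Business",
    "1A": "Risk Factors",
    "6": "Selected Financial Data",
    "7": "Management's Discussion and Analysis of Financial Condition and Results of Operations",
    "7A": "Quantitative and Qualitative Disclosures About Market Risk",
    "8": "Financial Statements and Supplementary Data",
}


def heading_pattern(number: str) -> re.Pattern:
    """Hypothetical helper: match 'Item <number>' followed by the first word of its title."""
    key = number.upper()
    first_word = TEN_K_ITEMS[key].split()[0]
    # \s* allows missing or extra whitespace; [.:\-]* allows punctuation after the number
    return re.compile(rf"item\s*{re.escape(key)}\s*[.:\-]*\s*{re.escape(first_word)}", re.IGNORECASE)


print(bool(heading_pattern("1A").search("ITEM 1A. RISK FACTORS")))  # True
print(bool(heading_pattern("1").search("Item 1A. Risk Factors")))   # False: "Item 1A" is not "Item 1"
```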

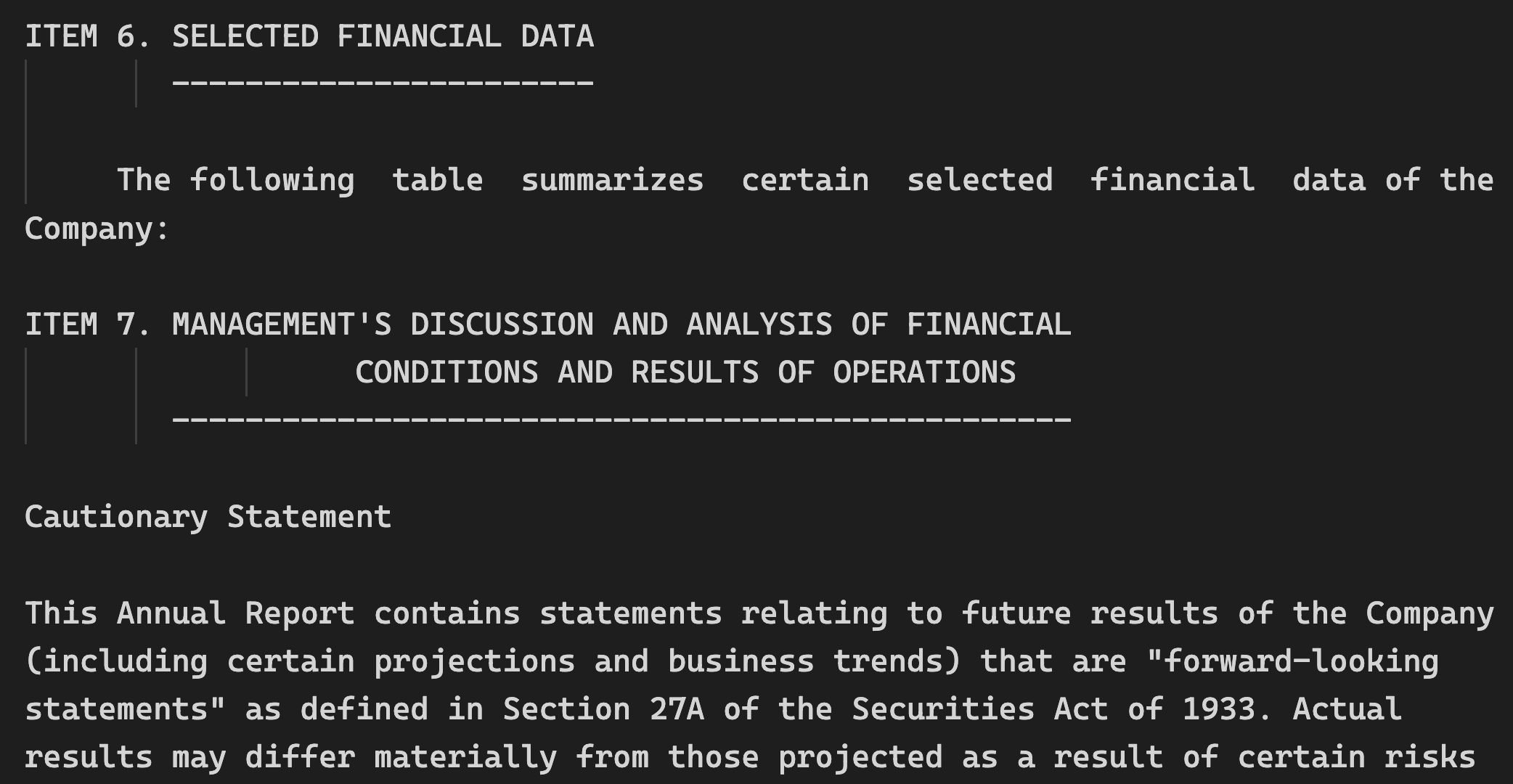

In finance research, the “Management’s Discussion and Analysis” item (MD&A, Item 7 of the 10-K) receives special attention. However, filings contain no universal markers for where each item begins and ends, so researchers have to develop their own text-extraction rules. An example is as follows:

This article provides example code to extract the major items from 10-K and 10-Q filings: Items 1, 1A, 7, and 7A of the 10-K, and Item 2 (MD&A) of the 10-Q.

2 Where can I find “cleaned” 10-K/Q files?

Before parsing, we first need to clean the 10-X filings, because the original EDGAR files contain many noisy HTML tags and special characters. Luckily, we don’t have to do this ourselves: there are three reliable sources that provide cleaned (and, in one case, fully parsed) 10-X filings.
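To give a sense of what “cleaning” involves, here is a crude sketch that uses only Python’s standard-library `html.parser`: it drops tags, skips `<script>`/`<style>` content, decodes entities such as `&nbsp;` and `&amp;`, and collapses whitespace. This is not the procedure Loughran–McDonald or WRDS use, it ignores EDGAR-specific issues such as embedded exhibits and binary attachments, and the names `_TextExtractor` and `crude_clean` are hypothetical.

```python
import re
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect text content, skipping anything inside <script> or <style>."""

    def __init__(self):
        super().__init__(convert_charrefs=True)  # decodes &amp;, &nbsp;, &#160; etc.
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def crude_clean(raw_html: str) -> str:
    """Strip tags and collapse whitespace (\\s also matches non-breaking spaces)."""
    parser = _TextExtractor()
    parser.feed(raw_html)
    parser.close()
    return re.sub(r"\s+", " ", " ".join(parser.parts)).strip()


print(crude_clean("<p>Item&nbsp;1A.</p><p>Risk &amp; Factors</p><style>p{color:red}</style>"))
# Item 1A. Risk & Factors
```

Joining text fragments with spaces keeps words in separate paragraphs apart, but it also splits a word whose letters are wrapped in different tags (e.g. `<b>Ri</b>sk`), which is one reason the curated sources are preferable.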

2.1 Option 1 (free): From Loughran–McDonald’s website

If you have studied the literature on company filings, then you must know Loughran and McDonald. They provide cleaned 10-K/Q filings on their website. The cleaning details can be found here.

2.2 Option 2 (paid): WRDS’s SEC suite

WRDS also provides its own version of cleaned 10-X filings. It’s not free: your school must subscribe to the SEC suite before you can access the data. But WRDS’s data has several advantages:

- Not limited to 10-X. In addition to 10-K and 10-Q, WRDS provides cleaned versions of all filings on EDGAR. These cleaned filings come in txt format, totaling over 2 TB.

- Frequent updates. WRDS cleans and updates the filings daily, so in theory, if a company files something on EDGAR, you can get the cleaned version the next day.

- Value-added products. WRDS also provides value-added products based on the SEC cleaned filings, such as n‑gram tables and sentiment.

2.3 Option 3 (paid): sec-api.io

sec-api.io is a paid service that provides fully parsed SEC filings. By “fully parsed” I mean that you can query individual items directly.

For example, if you want to get item 1A (Risk Factors) in clear text from Tesla’s recent 10-K filing, you can use the following HTTP query:

https://api.sec-api.io/extractor?url=https://www.sec.gov/Archives/edgar/data/1318605/000156459021004599/tsla-10k_20201231.htm&item=1A&type=text&token=YOUR_API_KEY

Sounds too good to be true, right? But wait, it’s not cheap. The monthly fee ranges from $50 to $240, depending on whether you’re an individual or a commercial entity. The real deal-breaker for me, though, is that it caps monthly data usage at 15 GB. Since even ten years of 10-K filings will be well over 15 GB, this service is impractical for me. A minor issue is that, unlike WRDS or Loughran–McDonald, sec-api.io does not open-source its parsing method, so you cannot verify the results.
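The query above is an ordinary URL with four parameters (`url`, `item`, `type`, `token`). A minimal sketch of building it in Python follows; `build_extractor_url` is a hypothetical helper, not part of any sec-api.io client library. It percent-encodes the nested filing URL, which is the standard way to pass a URL inside a query string; the sketch assumes the service decodes it as usual. The actual request is left commented out because it needs a valid API key.

```python
from urllib.parse import urlencode

EXTRACTOR_ENDPOINT = "https://api.sec-api.io/extractor"


def build_extractor_url(filing_url: str, item: str, token: str, fmt: str = "text") -> str:
    """Return an Extractor query URL using the parameters from the example above."""
    params = {"url": filing_url, "item": item, "type": fmt, "token": token}
    # urlencode percent-encodes each value (":" -> %3A, "/" -> %2F), so characters
    # such as "&" or "?" inside the filing URL cannot break the outer query string
    return f"{EXTRACTOR_ENDPOINT}?{urlencode(params)}"


url = build_extractor_url(
    "https://www.sec.gov/Archives/edgar/data/1318605/000156459021004599/tsla-10k_20201231.htm",
    item="1A",
    token="YOUR_API_KEY",
)
print(url)

# Sending the request requires a valid key, e.g.:
# import urllib.request
# risk_factors = urllib.request.urlopen(url).read().decode("utf-8")
```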

3 Python code to extract items

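The key idea is to use regular expressions (regex): one pattern matches the heading that opens an item, another matches the heading of the item that follows it, and the text between the two is the item.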

No regex rule is perfect; my current version has a failure rate below 0.5%.
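To make the key idea concrete, here is a toy example; the filing text and the two patterns are made up for illustration. A heading typically appears at least twice, once in the table of contents and once where the item actually begins, so pairing the first start with the first end captures only the table-of-contents line. Pairing every start with the next end and keeping the longest span recovers the body, which is the same logic the `extract_text` helper in the code below relies on.

```python
import re

# Made-up filing text: the MD&A heading appears in the table of contents and in the body
toy_filing = """TABLE OF CONTENTS
Item 7. Management's Discussion and Analysis ..... 25
Item 8. Financial Statements ..... 40

Item 7. Management's Discussion and Analysis
This section discusses the company's results of operations and liquidity in detail.
Item 8. Financial Statements and Supplementary Data
"""

start_re = re.compile(r"item\s*7\.\s*management", re.IGNORECASE)
end_re = re.compile(r"item\s*8\.\s*financial", re.IGNORECASE)

# Naive approach: first start to first end, which grabs only the table-of-contents entry
naive = toy_filing[start_re.search(toy_filing).start():end_re.search(toy_filing).start()]

# Pair every start with the first end after it, then keep the longest span
ends = [m.start() for m in end_re.finditer(toy_filing)]
spans = []
for m in start_re.finditer(toy_filing):
    end = next((e for e in ends if e > m.start()), None)
    if end is not None:
        spans.append((m.start(), end))
start, end = max(spans, key=lambda span: span[1] - span[0])
section = toy_filing[start:end]

print(naive)    # the table-of-contents line only
print(section)  # the heading plus the body paragraph
```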

There’s another, perhaps more accurate, version tailored to Item 7 of the 10-K. I didn’t use it because it’s too complicated, but you may find it useful: Using Regular Expressions to Search SEC 10K Filings - HDS (highdemandskills.com)

My method borrows heavily from parse_10K.py in the rsljr/edgarParser repository on GitHub.

The following code assumes you’re using Loughran–McDonald’s version of cleaned filings. The two functions, get_itemized_10k and get_itemized_10q, extract items from 10-K and 10-Q filings.

import re


# Break the filing text into itemized sections.

def extract_text(text, item_start, item_end):
    '''Return the longest span of text that runs from a match of item_start
    to the first match of item_end after it.

    Args:
        text: str, the filing text
        item_start: compiled regex pattern for the heading that opens the item
        item_end: compiled regex pattern for the heading of the next item

    Returns:
        str, or None if no start heading is followed by an end heading
    '''
    starts = [m.start() for m in item_start.finditer(text)]
    ends = [m.start() for m in item_end.finditer(text)]

    # Pair each start with the first end that comes after it. A heading can
    # match several times (e.g. in the table of contents and in the body),
    # so we may get multiple candidate spans; we keep the longest one.
    positions = []
    for s in starts:
        end = next((e for e in ends if e > s), None)
        if end is not None:
            positions.append((s, end))

    # No matches, or every start comes after the last end
    if not positions:
        return None

    s, e = max(positions, key=lambda p: p[1] - p[0])
    return text[s:e]


# 10-K patterns: section name -> (start of the item, start of the item after it)
PATTERNS_10K = {
    # ITEM 1: Business
    # if there's no ITEM 1A, it ends at ITEM 2
    'business': (
        re.compile(r"i\s?tem[s]?\s*[1I]\s*[\.\;\:\-\_]*\s*\b", re.IGNORECASE),
        re.compile(r"item\s*1a\s*[\.\;\:\-\_]*\s*Risk|item\s*2\s*[\.\,\;\:\-\_]*\s*(Desc|Prop)", re.IGNORECASE),
    ),
    # ITEM 1A: Risk Factors
    # it ends at ITEM 2
    'risk': (
        re.compile(r"(?<!,\s)item\s*1a[\.\;\:\-\_]*\s*Risk", re.IGNORECASE),
        re.compile(r"item\s*2\s*[\.\;\:\-\_]*\s*(Desc|Prop)|item\s*[1I]\s*[\.\;\:\-\_]*\s*\b", re.IGNORECASE),
    ),
    # ITEM 7: Management's Discussion and Analysis of Financial Condition and Results of Operations
    # it ends at ITEM 7A (if it exists) or ITEM 8
    'mda': (
        re.compile(r"item\s*7\s*[\.\;\:\-\_\s]*?(management(?:['’]|\s)?s\s+discussion|md\s*&\s*a)", re.IGNORECASE),
        re.compile(r"(?:item\s*7a\s*[\.\;\:\-\_\s]*?(?:quant|qualit))|(?:(?:part\s*ii\s*of\s*)?item\s*8\s*[\.\,\;\:\-\_\s]*(?:financial|finan))", re.IGNORECASE),
    ),
    # ITEM 7A: Quantitative and Qualitative Disclosures About Market Risk
    # it ends at ITEM 8
    '7a': (
        re.compile(r"item\s*7a\s*[\.\;\:\-\_]*[\s\n]*Quanti", re.IGNORECASE),
        re.compile(r"item\s*8\s*[\.\,\;\:\-\_]*\s*Finan", re.IGNORECASE),
    ),
}

# 10-Q patterns
PATTERNS_10Q = {
    # Part I, ITEM 2: Management's Discussion and Analysis of Financial Condition and Results of Operations
    # it ends at ITEM 3 (Quantitative and Qualitative Disclosures About Market Risk)
    'mda': (
        re.compile(r"item\s*2\s*[\.\;\:\-\_]*[\s\n]*Man", re.IGNORECASE),
        re.compile(r"item\s*3\s*[\.\;\:\-\_]*[\s\n]*Quanti", re.IGNORECASE),
    ),
}


def _itemize(fname, sections, patterns):
    '''Read a cleaned filing and extract each requested section.'''
    with open(fname, encoding='utf-8') as f:
        text = f.read()

    results = {}
    for section in sections:
        if section not in patterns:
            raise ValueError(f'unknown section {section!r}; choose from {list(patterns)}')
        item_start, item_end = patterns[section]
        results[section] = extract_text(text, item_start, item_end)
    return results


def get_itemized_10k(fname, sections: tuple[str, ...] = ('business', 'risk', 'mda', '7a')):
    '''Extract ITEMs from 10-K filing text.

    Args:
        fname: str, the file name (ends with .txt)
        sections: sections to extract; any of 'business', 'risk', 'mda', '7a'

    Returns:
        itemized_text: dict[str, str | None], where key is the section name and
        value is the text (None if the section was not found)
    '''
    return _itemize(fname, sections, PATTERNS_10K)


def get_itemized_10q(fname, sections: tuple[str, ...] = ('mda',)):
    '''Extract ITEMs from 10-Q filing text.

    Args:
        fname: str, the file name (ends with .txt)
        sections: sections to extract; currently only 'mda'

    Returns:
        itemized_text: dict[str, str | None], where key is the section name and
        value is the text (None if the section was not found)
    '''
    return _itemize(fname, sections, PATTERNS_10Q)
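Because no regex rule is perfect, it helps to check the output before using it. One simple approach, sketched below under assumptions that are not part of the code above (the `flag_suspect_sections` helper and its 250-word threshold are hypothetical), is to flag sections that were not found or are suspiciously short, since a capture that stopped at the table of contents is typically only a few words long. Some short sections are legitimate; for example, smaller reporting companies may simply state that Item 7A is not applicable, so inspect flagged filings by hand rather than discarding them automatically.

```python
from typing import Optional


def flag_suspect_sections(results: dict[str, Optional[str]], min_words: int = 250) -> list[str]:
    """Return the names of sections that are missing or shorter than min_words words.

    min_words is an arbitrary, hypothetical threshold; tune it on a hand-checked sample.
    """
    suspects = []
    for name, text in results.items():
        # None means no start heading was followed by an end heading
        if text is None or len(text.split()) < min_words:
            suspects.append(name)
    return suspects


# Example with made-up extraction results
example = {
    "mda": "word " * 300,     # long enough to pass
    "risk": None,             # not found
    "7a": "Not applicable.",  # too short, worth a manual look
}
print(flag_suspect_sections(example))  # ['risk', '7a']
```

In practice you would run it on the dictionaries returned by get_itemized_10k or get_itemized_10q for a sample of filings and review the flagged ones.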